
Jixie Zhang, 01/26/2021 01:47 AM

Daily Reports

Run plan document: [[https://docs.google.com/document/d/1Bmag_YPrl1szxLhYyA4eLUP2PV_pM0x51pHbeCj2hL4/edit?usp=sharing]]

FTBF secondary beam composition: [[https://ftbf.fnal.gov/particle-composition-in-mtest/]]

Current status:

| Beam | Time (h) | Run range | Triggers | Status |
|---|---|---|---|---|
| Energy scan | 8 | 804-822 | | done |
| 1 GeV | 24 | 823-847, 1035-1056 | 3.1M | done |
| 6 GeV | 16 | 848-857, 1062-1066 | 2.8M | done |
| 12 GeV | 24 | 867-902 | 2.9M | done |
| 16 GeV | 8 | 924-944 | 7.6M | done |
| 2 GeV | 24 | 945-969, 1057-1061 | 2.9M | done |
| 4 GeV | 24 | 970-1011 | 4.5M | done |
| 8 GeV | 20 | 1012-1033 | 5.5M | done |
| 10 GeV | 8 | 1066-1095 | 6.8M | done |
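As a quick cross-check of the status table, the sketch below totals the beam hours and trigger counts. It is a hypothetical helper written for this page, not one of the experiment's analysis scripts; the rows are transcribed by hand from the table, and the Energy scan row has no trigger count.

```python
# Hypothetical helper: tally the "Current status" table by hand-copied rows.
# Trigger counts use the table's "M" suffix (millions); None = not listed.
ROWS = [
    ("Energy scan", 8, None),
    ("1 GeV", 24, "3.1M"),
    ("6 GeV", 16, "2.8M"),
    ("12 GeV", 24, "2.9M"),
    ("16 GeV", 8, "7.6M"),
    ("2 GeV", 24, "2.9M"),
    ("4 GeV", 24, "4.5M"),
    ("8 GeV", 20, "5.5M"),
    ("10 GeV", 8, "6.8M"),
]


def parse_count(text):
    """Convert a count such as '3.1M' into an integer number of triggers."""
    if text.endswith("M"):
        return round(float(text[:-1]) * 1_000_000)
    return int(text)


def tally(rows):
    """Return (total beam hours, total triggers) over all rows."""
    total_hours = sum(hours for _, hours, _ in rows)
    total_triggers = sum(parse_count(t) for _, _, t in rows if t is not None)
    return total_hours, total_triggers


if __name__ == "__main__":
    hours, triggers = tally(ROWS)
    print(f"{hours} h of beam, {triggers / 1e6:.1f}M triggers")
```

Running it prints `156 h of beam, 36.1M triggers`.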

[[https://logbooks.jlab.org/files/2021/01/3864653/TriggerCounts.png]]

daily report 1/25/2021 -- by Jixie

- Last day of beam time; beam time ends at 20:00.

- 03:04 collected additional 3.0M triggers for 1 GeV

- 05:26 collected additional 1.6M triggers for 2 GeV

- 07:46 collected additional 1.5M triggers for 6 GeV

- 07:56 started taking data for 10 GeV

- 18:35 started taking calibration data for 10 GeV

- 20:00 collected 6.8M triggers for 10 GeV.

We finished the experiment!

daily report 1/24/2021 -- by Jixie

- Verified that the Cherenkov signal we connected to the FADC is really the raw signal, not the signal after DSC. No mistake was made. We were just misled by the noise. (https://logbooks.jlab.org/entry/3864278)

- Finished taking -8 GeV data. Run range is 1012-1033

- Started taking -10 GeV data, but MCR could not tune the beam for us; we got only ~2000 per spill. Stopped after 2 hours of trying.

- Switched to taking 1 GeV data. Only ~180 triggers per spill. Took data at this rate for 8 hours.

- 8:20 PM: MCR had an experienced crew on this shift. They tuned the machine to deliver ~3.5k triggers per spill.

We will take data at -1 GeV/c until 3 AM tomorrow, then do another beam energy scan.

- Scripts for the Cherenkov cut are ready.
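The Cherenkov cut scripts themselves are not on this page. To illustrate why a timing cut helps when the signal is smaller than the noise, here is a minimal sketch for a single FADC waveform. It assumes positive-going pulses in ADC counts, a baseline taken from the first samples, and placeholder window and threshold values; all function names and numbers are hypothetical. Only the samples in the window where the Cherenkov pulse is expected (relative to the trigger) enter the sum, so noise elsewhere in the readout does not contribute.

```python
def pedestal(samples, n_ped=10):
    """Baseline estimate: mean of the first n_ped samples (assumed pulse-free)."""
    head = samples[:n_ped]
    return sum(head) / len(head)


def window_integral(samples, start, stop, n_ped=10):
    """Pedestal-subtracted sum of samples[start:stop], the expected pulse window."""
    ped = pedestal(samples, n_ped)
    return sum(s - ped for s in samples[start:stop])


def passes_timing_cut(samples, start, stop, threshold, n_ped=10):
    """Keep the event only if the in-window integral exceeds the threshold."""
    return window_integral(samples, start, stop, n_ped) > threshold


if __name__ == "__main__":
    # Made-up waveform: flat baseline of 100 counts, small pulse at samples 20-22.
    waveform = [100] * 20 + [130, 160, 130] + [100] * 10
    print(window_integral(waveform, 20, 23))  # 120.0
    print(passes_timing_cut(waveform, 20, 23, threshold=50))  # True
    print(passes_timing_cut(waveform, 5, 8, threshold=50))  # False
```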

daily report 1/23/2021 -- by Jixie

- Finished taking data for -4 GeV by 8:25 AM, including calibration data. Run range is 970-1012.

- Working on automatic log entries from soliddaq; need to install a certificate.

- Found the Cherenkov signals. They are much smaller than the noise, but they become visible with a timing cut.

- MCR set a wrong angle on the septa, which caused a very low trigger rate. After they fixed this mistake, we received 12k triggers per spill.

- Fixed the internet connection problem on clrlpc.

- 8:30 AM: HVs are off and detectors are moved away from the beam line

- 20:00: The ATLAS group agreed to exchange 2 of their day shifts for 2 of our night shifts. From now on, we have 48 hours of beam time.

Xinzhan, Manoj and Jixie will rotate 8-hour shifts. We might need some remote help.

- Started taking -8 GeV data.

- The beam spot for -8 GeV is 6x6, and we found a lot of pile-up. Requested MCR to lower the beam intensity to ~9000 triggers per spill.

daily report 1/22/2021 -- by Jixie

- Continued taking data with -4 GeV

- MCR took almost 4 hours to tune the beam from 600 triggers per spill to 1800 triggers per spill. This also depends on who is on shift. We are losing a lot of potential triggers because MCR has to switch the beam between the primary proton beam and the secondary electron beam.

- Continued studying the Cherenkov signal. We viewed the signals on an oscilloscope, trying to find the timing between the Cherenkov signal and ftbf_trigger.

- MCR took 2 hours to tune the beam to deliver 1800 triggers per spill in the event

- clrlpc lost its public internet connection. Jixie is working on it.

- A VNC server was started on clrlpc (session :1), but it only accepts connections from clrlpc itself. A client that wants to view this VNC session must set up two SSH tunnels with port forwarding. The network route is: client -> soliddaq -> clrlpc.
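A minimal sketch of the two tunnels, assuming the standard VNC convention that display :N listens on TCP port 5900 + N (so session :1 is port 5901). The helper only builds the `ssh -L` command lines; `USER` and the short host names are placeholders, since the full host names are not given here. Hop 1 runs on the client and forwards to soliddaq; hop 2 runs inside the soliddaq session and forwards to clrlpc's localhost, where the VNC server accepts connections.

```python
VNC_BASE_PORT = 5900  # a VNC display :N listens on TCP port 5900 + N


def vnc_port(display):
    """TCP port used by VNC display :display."""
    return VNC_BASE_PORT + display


def tunnel_commands(gateway_login, target_login, display=1):
    """Build the two ssh commands (as argument lists) for a two-hop tunnel.

    Hop 1 runs on the client: client localhost:port -> gateway localhost:port.
    Hop 2 runs in the session on the gateway: gateway localhost:port ->
    target localhost:port, where the VNC server accepts connections.
    """
    port = vnc_port(display)
    forward = f"{port}:localhost:{port}"
    hop1 = ["ssh", "-L", forward, gateway_login]
    hop2 = ["ssh", "-L", forward, target_login]
    return hop1, hop2


if __name__ == "__main__":
    # "USER" and the short host names are placeholders.
    hop1, hop2 = tunnel_commands("USER@soliddaq", "a-compton@clrlpc")
    print(" ".join(hop1))  # run on the client
    print(" ".join(hop2))  # run inside the soliddaq session
```

With both hops open, the VNC viewer on the client connects to `localhost:5901`. With OpenSSH 7.3 or later, the same route can also be set up from the client in one command using ProxyJump: `ssh -J USER@soliddaq -L 5901:localhost:5901 a-compton@clrlpc`.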

daily report 1/21/2021 -- by Jixie

- taking 4 GeV data

- MWPC2 still has the same dead wires. Evan will replace the readout chips during the day while the beam is off.

- Beam is off from 6 AM.

- The MWPC decode program is ready to write raw data and reconstructed hits into a ROOT tree.

- Turned the HV off and lowered the detector out of the beam line so it is not hit by the 120 GeV proton beam during the day.

- Possible impact from the ATLAS group, who are taking day beam time (1/20 ~1/27) to test their 'telescope detectors'.

- Their setup includes 3 silicon detectors in a row then one center detector then another 3 silicon detectors in a row.

- The silicon detectors have a 4x4 cm window. The window frame is 3/4 inches thick aluminum.

- Their center detector is an aluminum box (wall thickness about 1 cm) with a 2x2 inch window to let the beam pass through.

- The sensor of the center detector is only 1x1 mm, so they have to align the sensor to the beam line carefully. They want to stay in the beam line for the whole week to avoid redoing the position alignment (~2 hours each time), which they must do whenever they push their setup away from the beam line.

- We are taking 4, 8 and 10 GeV data this week. The beam spot size is as large as 12 x 12 cm for 4 GeV, so we have to ask the ATLAS group to push their setup away from the beam line during 4 GeV data taking. For 8 and 10 GeV, we might let them keep only the center detector in the beam line and remove the other 6 silicon detectors.

- Update: the ATLAS group wants to stick with the original plan; we will request that they push their detector away from the beam line.

- Evan confirmed that we were connecting the Cherenkov logic signal after the discriminator. We changed these 3 channels to the raw signal from the PMT.

We found that the upstream Cherenkov is saturated, so we lowered its HV from 1700 V to 1640 V.

- Continued studying the Cherenkov signal.

- Had control access twice to replace MWPC1 readout chips

daily report 1/20/2021 -- by Jixie

- Our beam time changed to night time from today: from 8PM to 8AM next day.

- MWPC2 will be swapped in day time (too many dead wires)

- Beam was off from 7 AM, expect to be back by 6 PM

- Ecal decode program was modified to remove unused branches

- Finished taking 2 GeV data by 23:35

- Started taking 4 GeV data, plan to take 24 hours

- Started looking into the Cherenkov signal. It has only a single peak in the FADC; it seems to be a logic signal after the discriminator.

daily report 1/19/2021 -- by Jixie

- Production data of 16 GeV started from run 930, which is launched at 00:30 (AM).

- Finished taking 16 GeV data.

- Started taking 2 GeV data at 08:45 AM; plan to take 24 hours at this energy.

- The 2 GeV beam spot is much worse than at 16 GeV. MCR can do very little at this low energy.

- Requested MCR to push the number of particles at MT6SC1; it can reach 40K. The trigger count per spill is about 1300.

- Evan confirmed that MWPC2 will be swapped during the day on Wednesday.

daily report 1/18/2021 -- by Jixie

- Finished taking 12 GeV data, including calibration data (runs 903, 904, 905). Run range is 867-905.

- Started taking 16 GeV data. HVs for this energy were determined. We plan to take 8 hours of data, plus 1 hour calibration.

- Calibration data for 16 GeV are ready (runs 924-929).

- Requested MCR to limit the beam spot size to 6 cm in diameter. After that, we determined the new coordinates of the center of the 3 Ecal modules.

daily report 1/17/2021 -- by Jixie

- Automatic log entries work now. More and more analysis scripts are ready to use.

- Finished taking 6 GeV data, run number range is 848 - 866

- All beam energies <= 8 GeV will use the 8 GeV HV setting. Finished taking calibration data (runs 853, 854, 855) for this setting.

- 16 GeV beam is available, and its trigger rate can reach 15K per spill, almost 10 times that at any lower energy. We cannot take data at this beam energy until a 3rd HV module is set up.

- Started taking 12 GeV data from ~2PM. The plan is to take 24 hours of data, plus 2 hours of calibration data.

- The motion table position is optimized so that the beam hits the center of the 3 blocks.

- Scripts for backing up data and transferring data to JLab volatile are ready. Data will be backed up several times a day.
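The backup scripts are not reproduced here. As a minimal sketch of the idea, assuming raw files are written into one local directory and copied to a backup directory (both paths hypothetical), the helper below copies only files that are new or whose size changed since the last pass, so it can safely be run several times a day. A file still being written by CODA would be copied partially, but its size changes, so the next pass copies it again. The transfer to JLab volatile is not covered by this sketch.

```python
import shutil
from pathlib import Path


def files_to_back_up(src_dir, dst_dir, pattern="*"):
    """Source files that are missing from dst_dir or differ in size."""
    src, dst = Path(src_dir), Path(dst_dir)
    pending = []
    for f in sorted(src.glob(pattern)):
        if not f.is_file():
            continue
        copy = dst / f.name
        if not copy.exists() or copy.stat().st_size != f.stat().st_size:
            pending.append(f)
    return pending


def back_up(src_dir, dst_dir, pattern="*"):
    """Copy pending files (keeping timestamps) and return their names."""
    dst = Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    copied = []
    for f in files_to_back_up(src_dir, dst, pattern):
        shutil.copy2(f, dst / f.name)
        copied.append(f.name)
    return copied
```

A call such as `back_up("/path/to/raw_data", "/path/to/backup", pattern="*.dat")` (placeholder paths) from a cron job would give the "several times a day" schedule; comparing sizes rather than checksums keeps each pass cheap on large raw files.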

daily report 1/16/2021 -- by Jixie

- The beam energy scan was finished on Jan 15; the HV for each beam energy is determined.

- Finished 24 hours of 1 GeV data taking using the 8 GeV HV setting. We forgot to change it back, but since the detector is not saturated, we did not bother to change it. Run number range is 823 - 847.

- Started 6 GeV data taking at run number 848. Plan to take 18 hours of data.

- Working on automatic start|end-of-run log entries.

- The MWPC decoder and analysis program works. The beam spot size is huge (9 cm x 8 cm).

daily report 1/15/2021 -- by Xiaochao from far away

- good gracious, we keep getting 24 hours a day

- we should keep our daily work hours below 16 and stretch shifts to 8 hours

- rate scan is done up to 12 GeV energy. And we are now taking 1 GeV data

- the trigger is from FTBF's 3 scintillator paddles coincidence with the spill signal. FTBF's 2-chamber Cherenkov signals are not in the trigger but are in our FADCs.

- MWPC had problems: one was fixed today (hardware); both are now working and online, and the MWPC daq is working and synced with our daq. But the software is missing a library, so we can't decode the data.

- According to David's post on Slack:

David Flay 2:20 PM

ok, using the rates above, I find for our remaining 11 days:

2:20

E = 12.0 GeV, numShifts = 1.3, totEvt = 16356343

E = 10.0 GeV, numShifts = 0.8, totEvt = 16356343

E = 8.0 GeV, numShifts = 0.7, totEvt = 16356343

E = 6.0 GeV, numShifts = 0.8, totEvt = 16356343

E = 1.0 GeV, numShifts = 14.7, totEvt = 16356343

E = 2.0 GeV, numShifts = 2.5, totEvt = 16356343

E = 4.0 GeV, numShifts = 1.2, totEvt = 16356343

2:21

clearly that 14.7 shifts doesn’t make sense.

David Flay 2:27 PM

I think it checks out;

2:27

Total time: 264.0 hrs

E = 12.0 GeV, weight = 0.061, time = 16.1 hrs, numShifts = 1.3, totEvt = 16356343

E = 10.0 GeV, weight = 0.038, time = 10.0 hrs, numShifts = 0.8, totEvt = 16356343

E = 8.0 GeV, weight = 0.032, time = 8.5 hrs, numShifts = 0.7, totEvt = 16356343

E = 6.0 GeV, weight = 0.038, time = 9.9 hrs, numShifts = 0.8, totEvt = 16356343

E = 1.0 GeV, weight = 0.666, time = 175.9 hrs, numShifts = 14.7, totEvt = 16356343

E = 2.0 GeV, weight = 0.112, time = 29.5 hrs, numShifts = 2.5, totEvt = 16356343

E = 4.0 GeV, weight = 0.053, time = 14.0 hrs, numShifts = 1.2, totEvt = 16356343

Totals:

Time = 264.0 hrs

Shifts = 22.0

daily report 1/14/2021 -- by Xiaochao from far away

- beam started sometime in the evening.

- we got the night time beam as a bonus - the other group does not need it - causing us to scramble the shift schedule. Paul Reimer came in for the night shift, until 3am - thanks!

- by midnight, we were sending beam to the center of each block, trying to adjust the HV for gain matching.

- Jixie adjusted Cherenkov pressure around 10pm. Previously the two chambers were set at 4.0 and 0.2 psia, respectively. I think Jixie lowered one of them from 4.0 to 0.8

- complete gain/HV-matching of the 3 shashlyk modules

- center the beam at the middle of the 3 blocks (a little off-center may be ideal, better than the exact center) and do an energy scan overnight to figure out rates

- David will rework the runplan during the day

- One of the MWPCs is broken; it will be installed during the day
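David's Slack post quoted above gives every energy the same totEvt (16356343) and splits the 264 hours (11 days) as time = weight × 264 h, with numShifts = time / 12 h (for example, 0.061 × 264 h ≈ 16.1 h ≈ 1.3 shifts, and 264 h / 12 h = 22 shifts). If each energy collects the same event count N at a constant rate R_i, then t_i = N / R_i, so the weights must be proportional to 1 / R_i; under this reading, 1 GeV gets 14.7 shifts because it has the lowest rate. The rates David used are not on this page, so the sketch below is a hypothetical reconstruction of that bookkeeping, not his script, and it runs on made-up rates.

```python
SHIFT_HOURS = 12.0  # 264 h / 22 shifts in the Slack post


def plan(rates_per_hour, total_hours):
    """Split total_hours so that every energy collects the same number of events.

    rates_per_hour maps beam energy (GeV) -> expected events per hour.
    Returns (events_per_energy, {energy: (weight, hours, shifts)}).
    """
    inverse = {e: 1.0 / r for e, r in rates_per_hour.items()}
    inverse_sum = sum(inverse.values())
    events = total_hours / inverse_sum  # N = T / sum_j(1 / R_j)
    table = {}
    for e, x in inverse.items():
        weight = x / inverse_sum  # w_i = (1 / R_i) / sum_j(1 / R_j)
        hours = weight * total_hours  # t_i = w_i * T = N / R_i
        table[e] = (weight, hours, hours / SHIFT_HOURS)
    return events, table


if __name__ == "__main__":
    # Made-up rates (events/hour), only to exercise the arithmetic.
    events, table = plan({1.0: 100.0, 2.0: 300.0}, total_hours=24.0)
    print(f"events per energy: {events:.0f}")
    for energy, (w, h, s) in table.items():
        print(f"E = {energy} GeV, weight = {w:.3f}, time = {h:.1f} hrs, numShifts = {s:.1f}")
```

Plugging in the measured per-energy rates and total_hours = 264 should reproduce the weights in the post, up to rounding.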

daily report 1/13/2021

A busy and tiring day! At least our DAQ is ready to take beam with our own trigger!

- Started installation at 7 AM. Finished installing detectors and cabling by 1 PM.

- Put the rack in the control room so no access is needed for daq work. Our daq machines are also in the control room.

- David came to the site and helped a lot in setting up computers. Xinzhan and I worked on detectors and daq cabling. Both desktops are online. CODA runs well.

- The Operational Readiness Clearance (ORC) meeting started at 1 PM. The detectors are all good. However, it was initially suggested that the fuses on the JLab-made VME cards be changed. After a long negotiation, we were asked to cover the power interface with Kapton tape. We also received some suggestions on running the experiment safely. We might get all signatures by tomorrow.

- We connected the HV and signal cables to the patch panel. All PMT signals have been checked with an oscilloscope, and all detectors work well. We did not have enough time to modify the daq to take the FTBF trigger. FTBF has 3 channels for the Cherenkov, none of which is in our FADC yet (need to find cables and do a fan-in/fan-out). The MWPC daq does not have a veto input, so it cannot take our busy status directly. We have to form a new trigger and then feed it in. We will do this on Thursday morning.

- Accelerator is down. One magnet is not functioning. They are waiting for the radiation to cool down so they can fix it tomorrow morning. Beam might be available in the afternoon.

- FTBF controlled-access leader training on Thursday morning at 9 AM.

- The MWPC daq is a well-integrated machine (it looks like a laptop). It does not provide an L1A signal. We need to learn more about this system to find a possible way to take their L1A. We will then veto it using our busy status. Need to spend time on this Thursday.

- Connect the Cherenkov signals to our FADC.
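The plan to veto the MWPC L1A with our busy status amounts to accepting a trigger only as L1A AND NOT busy. The toy model below illustrates that gating; it is not the actual NIM logic. It assumes each accepted trigger keeps the daq busy for a fixed dead time (a hypothetical parameter) and uses made-up trigger times. Gating on busy matters for synchronization: a trigger that arrives while one system is busy would otherwise be recorded by only one of the two DAQs, and their event counts would drift apart.

```python
def accepted_triggers(trigger_times_us, dead_time_us):
    """Return the triggers that arrive while the daq is not busy.

    Logic: accept = L1A AND NOT busy. Each accepted trigger makes the daq
    busy for dead_time_us; triggers arriving in that window are vetoed.
    """
    accepted = []
    busy_until = float("-inf")
    for t in sorted(trigger_times_us):
        if t >= busy_until:
            accepted.append(t)
            busy_until = t + dead_time_us
    return accepted


def live_fraction(trigger_times_us, dead_time_us):
    """Fraction of offered triggers that survive the busy veto."""
    if not trigger_times_us:
        return 1.0
    kept = accepted_triggers(trigger_times_us, dead_time_us)
    return len(kept) / len(trigger_times_us)


if __name__ == "__main__":
    times = [0, 5, 12, 30, 33]  # made-up trigger times in microseconds
    print(accepted_triggers(times, dead_time_us=10))  # [0, 12, 30]
    print(live_fraction(times, dead_time_us=10))  # 0.6
```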

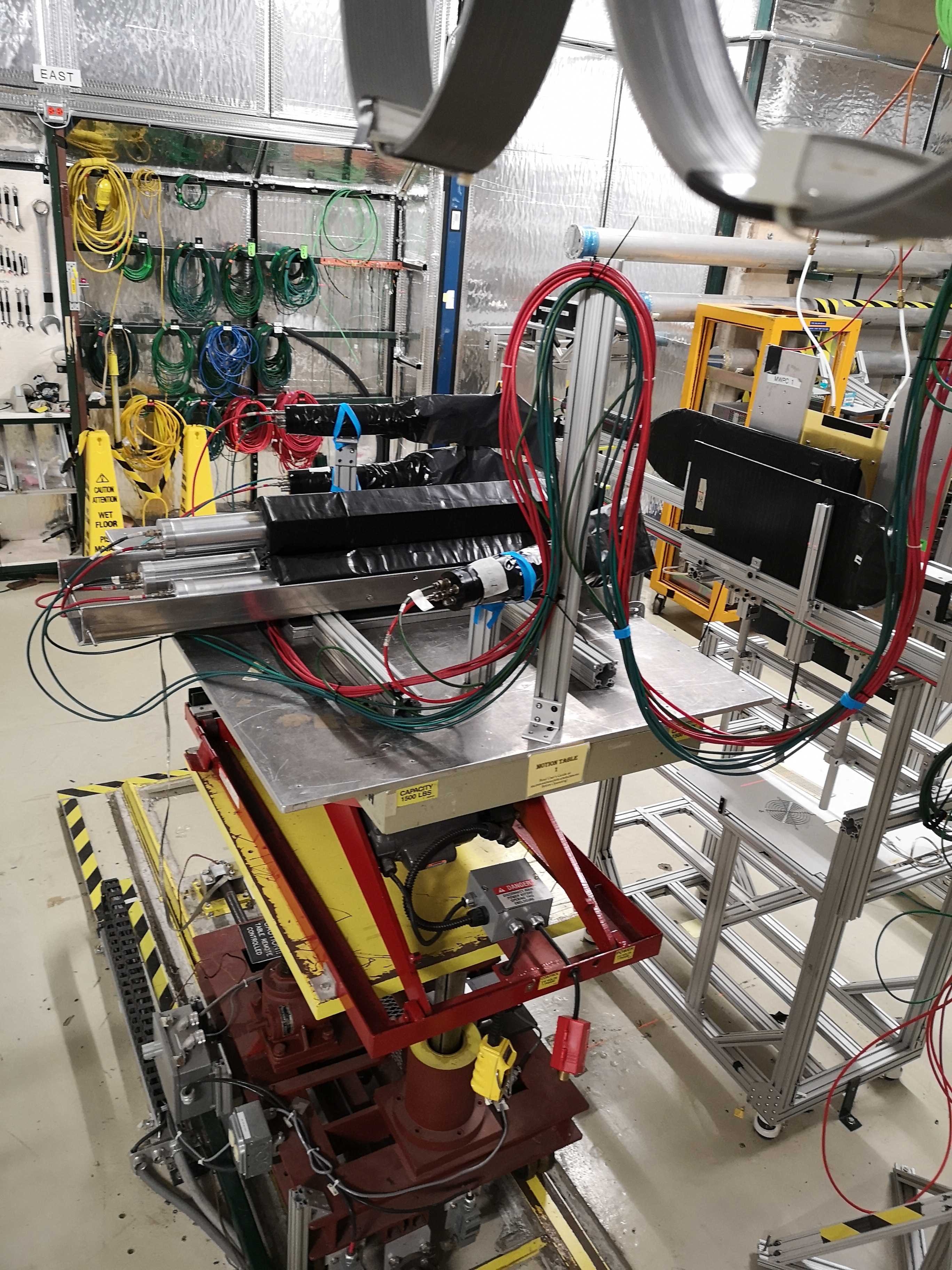

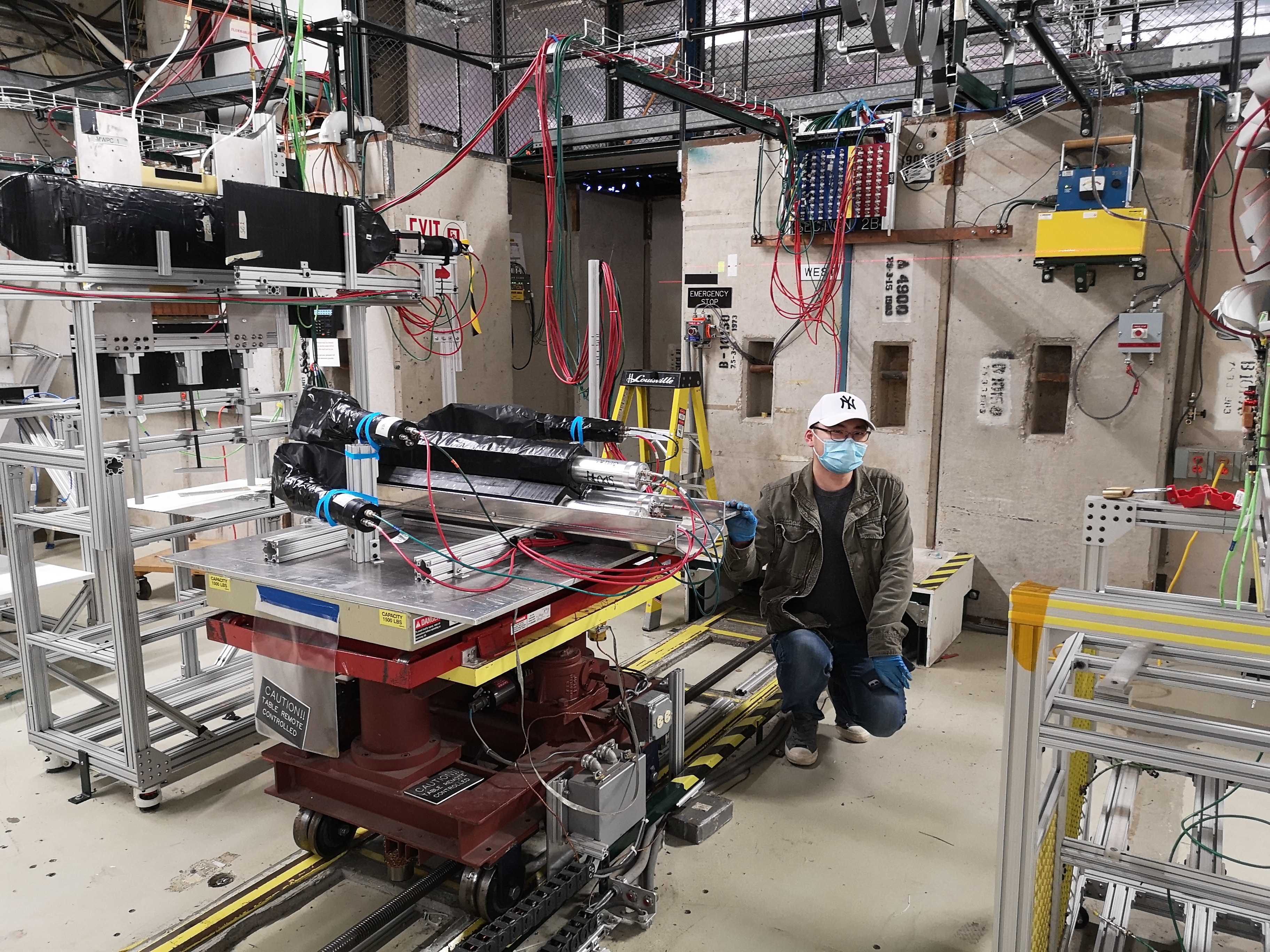

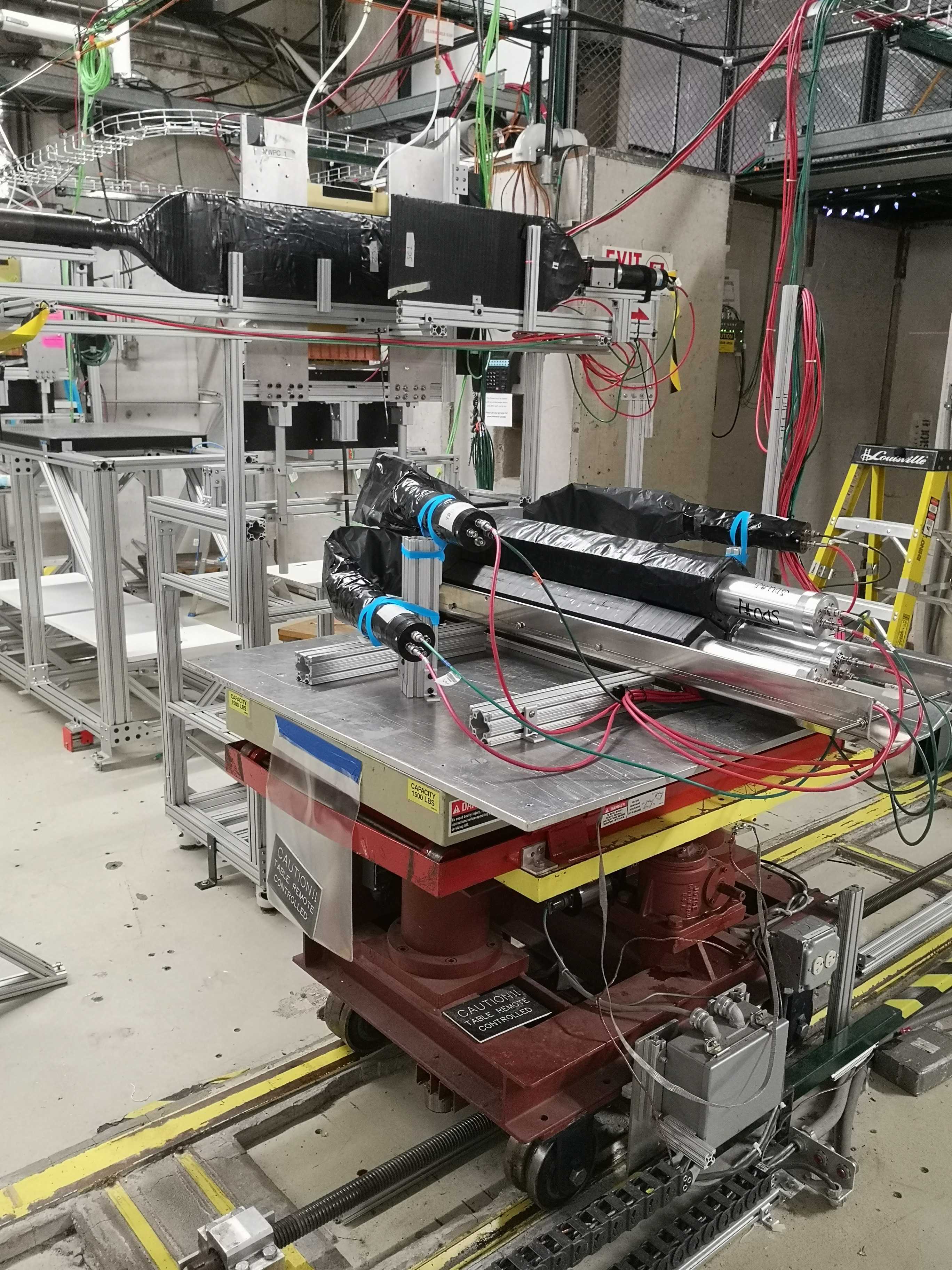

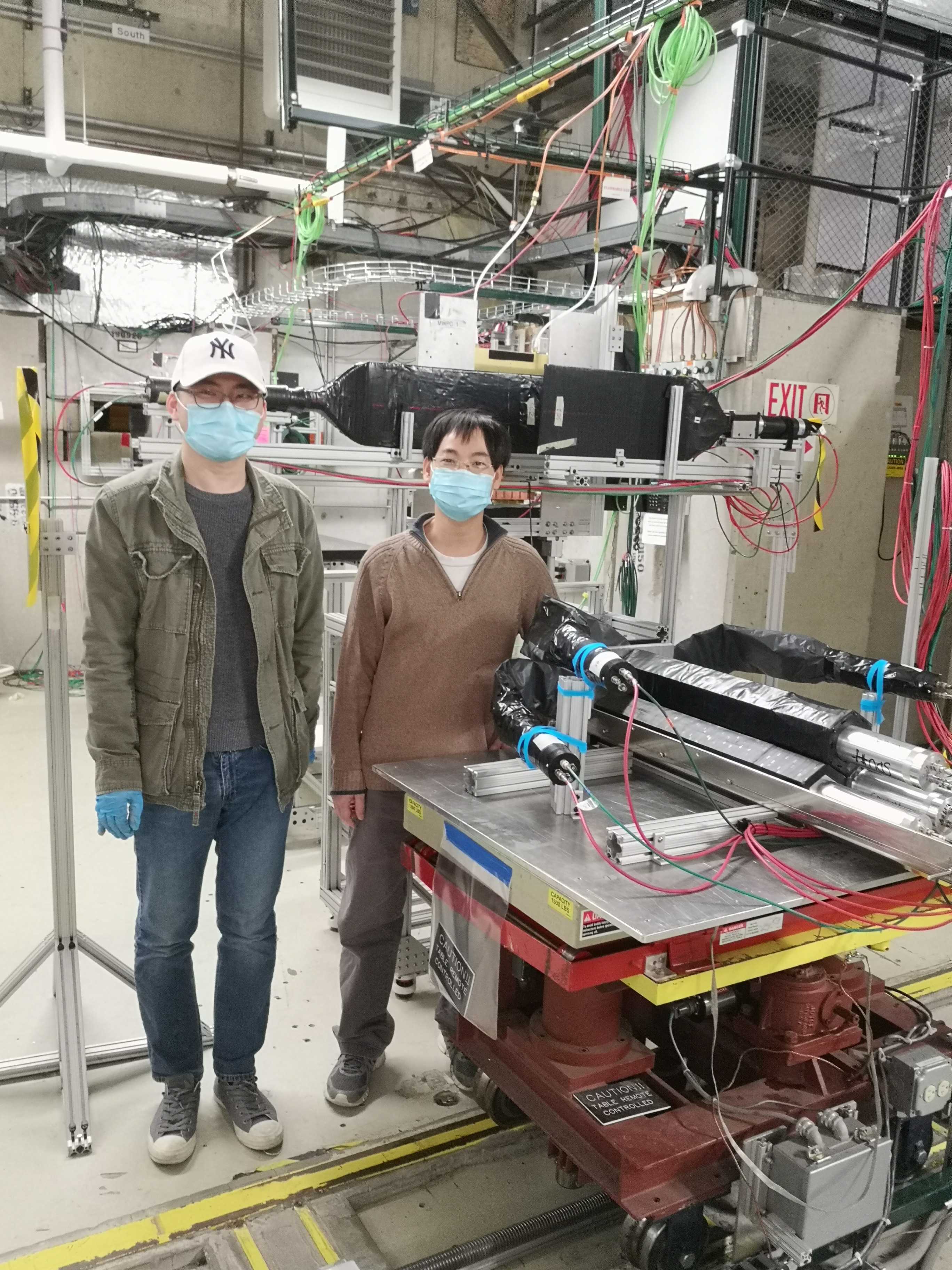

Pictures are under "useful pictures" with the prefix 20200113_xx.

- Jixie and Xinzhan both took the radiation practical training.

- Met Lorenzo and had a long, in-depth conversation with him. He helped us set up the gateway machine, soliddaq. Right now soliddaq has 2 network interfaces, one of which is borrowed from FTBF. soliddaq can access the public network, and it also serves as the gateway of the private network. Because IP forwarding is not enabled, private network members cannot access the public network yet. (I need help from an expert to enable this.)

- To access clrlpc, first log in to soliddaq with your Fermilab Linux account, then ssh from soliddaq into clrlpc using the a-compton account. If you want to do that, send me your Fermilab username. I can add your username to the list that allows you to log in to soliddaq with the a-compton account.

- David brought the hard drives and the PCIe-to-USB 3.1 converter card. Two 5 TB hard drives (2.5") were installed in the USB hub provided by Alex. We tested the data transfer speed: it can reach 130 MB/s when the CPU is idle, but it changes a lot when CODA is running.

- JLab experts are working on the DSC issues. The fan-in/fan-out module degrades the input signal too much, which is part of the reason the DSC lost signal. We borrowed one from FTBF. JLab experts are also checking and fixing the software. Right now we can see the TDC in the output file. Alex is still working on it to make sure it does not lose data.

- David is working on ORC form.

- Make a copy of the data on soliddaq; this copy will also be our backup of all raw data.

- Send data from soliddaq to JLab.

- The beam will be off from 6 AM Wednesday. We will start installation at 7 AM and aim to finish cabling and installation by 1 PM.

- The ORC inspection is scheduled at 1 PM Wednesday. I will be there in person; David will join remotely.

- The beam is scheduled to be delivered by 5:30 PM. Looking forward to taking beam!

- Put all our computers on a private network switch using IPs 192.168.1.x, reserving 192.168.1.1 for the gateway. All computers can talk to each other. Still waiting for FTBF to provide a gateway. Talked to Evan and Lorenzo; they understood our request and will set one up tomorrow.

- Rebooted the VME crate. The hostname 'intelvmeha8' came back. CODA starts and stops smoothly; no need to start ROC2 manually.

- DSC did not write data to the output file. Experts at Jlab are working on this.

- Talked to Evan about the MWPC daq. Learned that we can easily take their trigger, which is SC1&SC2 (both on the FTBF beam line). We decided to take the L1A signal from the MWPC daq as our trigger. This way, the events from our daq are already synchronized with the MWPC daq. We will still put our SCs into the FADC; they just no longer serve as triggers. We will also plug the Cherenkov signals into our FADC and put a copy of the MWPC daq's L1A into our TDC.

- David is preparing the ORC document

- Had a meeting with Lorenzo; we agreed to start shifts at 8 AM|PM.

- install kerberos kit in clrlpc. We will not use it during data taking but can use it if needed. Only people with a Fermilab linux account can access it.

- taped the lead block.

- get a gateway

- solve DSC

- look at raw data file of MWPC daq, try to understand their daq

- Paused daq data taking to test the 3 preshowers. They are all good.

- Continued testing the TDC configuration. Looked at the TDC signal in the raw data file. Improving/updating CODA and the decode program under the guidance of Alex.

- taking cosmic data. right now daq machine clrlpc is offline

- finish setting up private network

- tape the lead block

- practice sending data back to jlab

- take training on Tuesday

- Finished installing the Kerberos kit on the soliddaq desktop (the backup one).

- Zoom meeting with Alex: we are now able to run CODA, but only if we do not follow the Fermilab Kerberos ssh requirements. The trick is to use the JLab configuration to start CODA and all ROCs, then switch to the Fermilab configuration once CODA is running. This is not a solution! We have to put the daq on a private network.

- Found a 1000 Mbps switch and several cat5e cables. Cat5e cables can reach 1000 Mbps, while cat6 can reach 10 Gbps over short runs. It would be better if we had enough cat6 cables. Plugged 2 laptops into the switch and manually specified the IP and gateway; the 2 laptops can talk to each other. I tried to set up my laptop (Windows 10) as the gateway, using WiFi to connect to the Fermilab public network. Failed!

- Ask Fermilab to provide a gateway. (The backup daq machine must be identical to the main daq machine.)

- To transfer data to JLab, I will send data from the backup daq machine to the JLab work disk.

- Alex, please help me find out which disk I can write raw data to (total space no more than 14 TB).

- Request FTBF to provide a gateway, finish setting up the private network

- CODA ROC2 needs to be started manually; need to fix that.

- work out a script to transfer data

- waiting for HA approval then tape the lead

- test preshowers when a 2nd HV module is available.

- Both desktops got blocked because password authentication is not allowed. Solved it by reconfiguring the sshd_config file.

- Applied for host principals for both desktops. Got the password in the afternoon, but I had typed the wrong host name for the main daq machine. Reapplied and am now waiting for the supervisor's approval. The backup machine (soliddaq) is ready for the Kerberos kit installation, but there was no time to do it.

- connected daq system. hooked SCs and Ecals into daq. viewed trigger signals. ready to take cosmic data. Preshowers are not connected yet.

- hooked vme directly to clrlpc, configured them to talk to each other. 2 machines can talk now.

- Alex helped through a Zoom meeting. After some modifications, Alex could run CODA to take events. But I could not run it myself, possibly because some configuration changes Alex made were not saved.

- Paul came to help. We are trying to solve the network issue. The desktop clrlpc runs a Puppet service that frequently overwrites sshd_config, so all my modifications are gone. We have to solve this ASAP; otherwise this machine will be blocked again once the Fermilab robot catches it. The Puppet service was temporarily disabled.

- Rearranged the detectors so that the 2 SCs and the Ecals are in the same line.

- My laptop got blocked for using the X11 service.

- Install kerberos kit

- configure coda

- test preshowers

- tape the lead plate

- installed both the nim and the vme crates onto a movable rack

- Learned how to use FTBF's HV module. This module can only supply HV in the range from (set point - 580 V) to the set point. For example, if the set point is 2500 V, the HV range is 1920-2500 V. In our case, we need to set the Ecal at 900 V, the SC at 1300 V, and the preshowers at 2100 V, so we have to use two HV modules.

- We looked at the signals for all 3 Ecal modules and 2 SCs. We didn't damage them during the bumpy drive. The signals look good.

- We installed the 3 Ecal modules into the tray and carefully adjusted their positions to make sure they attach to each other tightly.

- Registered both desktops, clrlpc and soliddaq, on the Fermilab public network. The latter is a backup for the daq machine. Both of them are now online. If you have a Fermilab computer account, you can ssh to them using the account a-compton.

- test preshower detectors

- apply for a computer account so I can access the daq machine from the apartment

- connect daq system to detectors

- tape the lead plate after HA approval
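The FTBF HV module limitation noted above (a module covers set point - 580 V up to the set point) turns module planning into grouping the required voltages into 580 V windows. A minimal sketch, assuming one set point per module and the voltages quoted in that note, shows why two modules were needed: 900 V and 1300 V fit in one window, while 2100 V does not. The helper names are hypothetical.

```python
MODULE_SPAN_V = 580  # a module supplies [set point - 580 V, set point]


def channel_range(set_point_v, span_v=MODULE_SPAN_V):
    """Voltage range available on one module for a given set point."""
    return set_point_v - span_v, set_point_v


def assign_modules(required_v, span_v=MODULE_SPAN_V):
    """Group required voltages into as few modules as possible.

    Greedy interval cover: walk the voltages in ascending order and start a
    new group whenever a voltage is more than span_v above the lowest voltage
    of the current group. Each module is set to the highest voltage it serves.
    Returns a list of (set_point, [voltages]).
    """
    groups = []
    for v in sorted(required_v):
        if groups and v - groups[-1][0] <= span_v:
            groups[-1].append(v)
        else:
            groups.append([v])
    return [(max(g), g) for g in groups]


if __name__ == "__main__":
    print(channel_range(2500))  # (1920, 2500)
    needs = {"Ecal": 900, "SC": 1300, "preshower": 2100}
    for set_point, volts in assign_modules(needs.values()):
        low, high = channel_range(set_point)
        print(f"module at {set_point} V covers {low}-{high} V for {volts}")
```

Setting each module to the highest voltage in its group matches the way the note describes the range (set point on top), and the greedy grouping is the standard way to cover sorted points with fixed-width windows using the fewest windows.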
